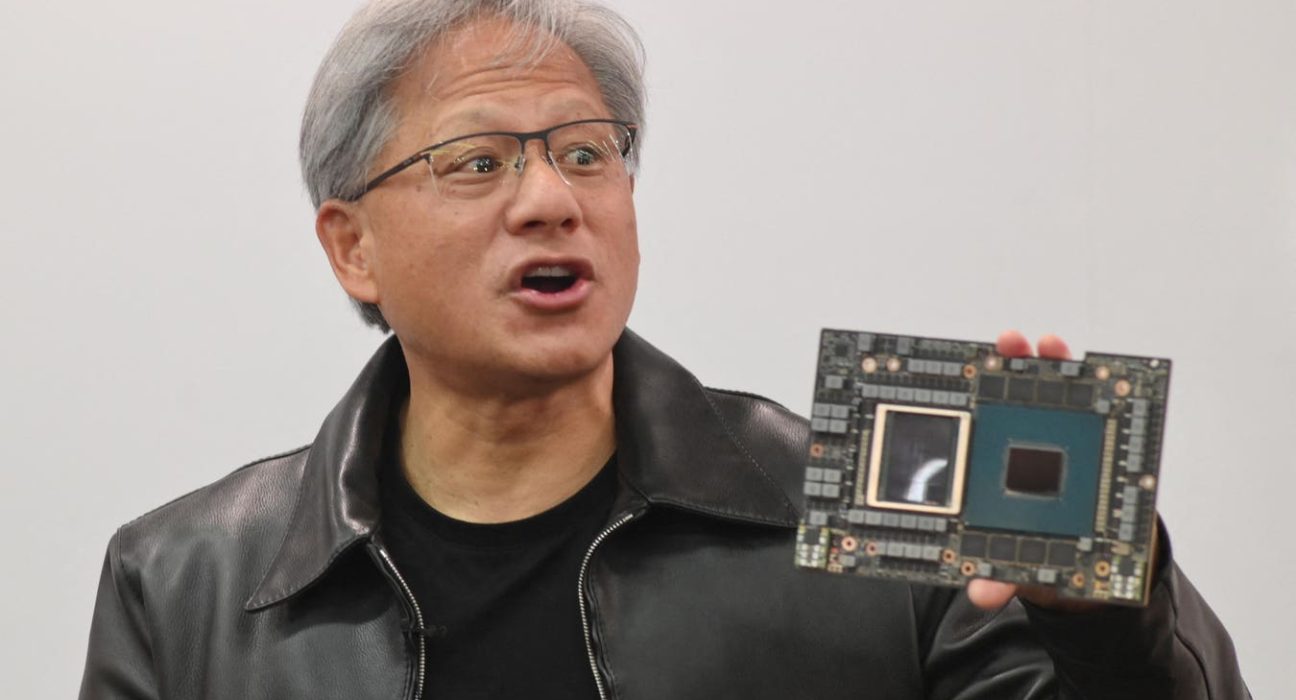

Nvidia CEO Jensen Huang shown here holding a CPU designed for AI data centers. The company’s A100 and H100 GPUs have been in even higher demand among AI companies.

AFP via Getty Images

Last year, entrepreneur-turned-investor Nat Friedman found himself unwittingly becoming a full-time computer chip broker for upstart AI companies. “There were weeks where I spent most of my time finding GPUs for people,” he told Forbes, referring to the graphics processing units that are used to train and run AI models. “Asking founders what they needed help with, that was problem number one.”

Friedman, the former CEO of Microsoft’s developer platform GitHub, and frequent investing partner Daniel Gross, who sold his first startup to Apple, were calling in so many favors to chip providers that by midyear they decided they would be better off hiring a team of engineers and spending nine figures upfront to build their own supercomputer. They now run the Andromeda Cluster, their name for several computing stockpiles totaling more than 4,000 GPUs, which they make available to companies in their portfolio for a fee below market price. Since its announcement last June, other tech investors have followed suit with offerings to support companies. Index Ventures announced a program last fall to give startups free access to a cluster run by Oracle. Microsoft has set aside several thousand chips for use by startups in its venture arm M12, the startup accelerator Y Combinator and a handful of other early-stage funds. Conviction Partners also manages a smaller cluster, founder Sarah Guo told Forbes.

Andreessen Horowitz is negotiating with chip providers to set up a compute program, according to three sources. One person with direct knowledge said the firm is targeting tens of thousands of GPUs for an offering that “will make everything else pale in comparison.” The firm declined to comment.

“It’s important for the Silicon Valley ecosystem that the overachieving outsider and the person working at Google have access to similar amounts of opportunity and compute,” Gross told Forbes in an interview. “That was just not the case in AI.”


As GitHub CEO from 2018 to 2021, Friedman was in charge of accumulating upwards of 4,000 GPUs to create GitHub Copilot, a tool that helps software engineers write code and is today one of generative AI’s first commercial successes, with revenue north of $100 million. Demand has only intensified as the chips have become the backbone for training AI models such as OpenAI’s ChatGPT and its successor GPT-4. Last month, Mark Zuckerberg announced plans to amass 350,000 H100s (Nvidia’s top-shelf chip, which retails for more than $30,000 a pop) by the end of the year to advance Meta’s AI efforts. The fervent demand propelled Nvidia to a trillion-dollar market cap. Meanwhile, Google, Amazon and legacy semiconductor firms like AMD are scrambling to mass-produce their own AI chips, but are running into supply chain constraints because they are all beholden to a single semiconductor producer.

The tight supply has in turn bottlenecked technical progress, and startups in particular are at a disadvantage. Even when the likes of Microsoft Azure or Amazon Web Services have computing capacity available to sell, they are more likely to do business with a large customer. “The way you book a non-trivial chunk of GPUs is you have to book them for multiple years at a time and pay some non-trivial chunk upfront,” Friedman said. “If you’ve only raised in the low tens of millions, you can’t even do that.”

Andromeda, then, effectively functions as the large customer capable of shouldering a long-term contract. Friedman and Gross split access to it across dozens of portfolio companies including voice cloner ElevenLabs and video generator Pika, and bill for it based on usage. “We charge a really small margin to cover our operating costs, but we make our money by helping the startups succeed,” Friedman said. The pricing was competitive, Pika CEO Demi Guo said, allowing the startup, which initially only focused on building an engine to generate anime-style videos, to experiment with realistic videos. Being able to do so expedited the company’s progress at a time when Guo said many of her AI startup peers were treading water waiting for compute access. Gross calls it a “time dilation mechanism”: “The thing that you need more than anything is time. The compute serves as a way to accelerate time where you can take a model that was going to take a year to train and have done on our cluster in a week.”

But running a cluster is no small undertaking. Friedman and Gross paid nine-figure upfront costs to lease the chips, most of which come via cloud computing provider CoreWeave, a $7 billion startup they backed. The duo is also on the hook for upkeep. That required hiring a team of engineers to manage the supercomputer and develop software that lets startups self-schedule bookings (Friedman and Gross contributed some of the code). Also necessary: contractors to monitor the data center for GPUs that burn up and die.

All this experimentation is possible, Gross said, because he and Friedman have a “significantly higher” proportion of their personal money invested into their AI-focused practice compared to industry norms. Not being so subject to the expectations of limited partners has offered them freedom to subvert the standard venture capital playbook, he said: “Why is an industry so close to cutting edge tech so stagnant?”

Nat Friedman (left) and Daniel Gross (right) launched the Andromeda Cluster, which now contains more than 4,000 GPUs, last summer.

Nat Friedman and Daniel Gross

“It’s an industrial scale operation. It requires late nights, early mornings, sleepless weekends,” Gross said of the Andromeda cluster. “If Nat and I didn’t have a decade of experience running software and hardware projects, it’d be tricky to run.”

That’s one reason other firms’ efforts to provide compute support have been more modest. Index pays Oracle to manage the infrastructure. “To go and have to hire an entire team of people to manage a cluster of servers just doesn’t seem like the best use of our time,” partner Erin Price-Wright told Forbes. Index’s offering serves as a no-cost entry point that companies use for about three months for testing purposes so they can better forecast how many GPUs to buy later on, she said. Y Combinator and M12 work similarly, offering free compute access for a limited duration through the partnership with Microsoft. The program is meant to provide a sandbox for experimentation; oftentimes, startups are using the GPUs to release their first-ever prototypes, said Annie Pearl, a corporate vice president at Microsoft.


Although there’s no usage limit on Andromeda, it too caters primarily to seed and Series A startups because of its current size, Friedman said. “No one’s cluster is big enough for when your companies grow,” Conviction’s Guo said, noting that if an AI company matures enough to need several thousand chips, it will need to negotiate directly with cloud providers. A number of GPU-specialized cloud providers like CoreWeave, Lambda Labs, Tether and Together are emerging to help broker deals for these mid-sized companies.

But most of the AI investors Forbes spoke to said that GPU demand continues to outstrip supply. And as new AI models like Anthropic’s Claude 2.0 and Google’s Gemini show performance improvements over their smaller predecessors, most leading research labs are choosing to keep building bigger models. Anthropic raised $750 million last month in an unusual funding round after securing billions last year. Forbes reported in August that a team of former Meta researchers looking to develop an AI language model for biology forecast needing $477 million of capital in its first three years of existence (more than half of that was projected to be spent on compute).

The GPU shortage may be easing for users that need only smaller clusters, Friedman said, but it persists for big clusters of thousands or tens of thousands of chips. With that in mind, he and Gross are exploring turning Andromeda into an even bigger supercomputer that could serve larger companies. “We booked what we thought was a big cluster, but it turns out people need more,” Friedman said. “So we’re exploring the next couple horizons here and seeing if there’s a place for us to make a difference.”