Key takeaways

|

Imagine using an advanced large language model like GPT-4o to automate critical customer support operations, only to later discover that attackers exploited a hidden prompt injection vulnerability, gaining unauthorized access to your sensitive data.

This scenario is a real possibility for businesses rushing to deploy AI without thorough security testing.

While 66% of organizations recognize AI’s crucial role in cybersecurity, only 37% have processes in place to assess the security of AI tools before deployment, according to the World Economic Forum’s 2025 Global Cybersecurity Outlook.

Many businesses remain unaware of the unique vulnerabilities inherent in LLMs.

Traditional security metrics, like general attack success rates, can be misleading, creating a false sense of security while masking critical flaws. This could leave businesses vulnerable to preventable risks, highlighting the urgent need for robust AI security solutions.

Revealing critical vulnerabilities: Why proactive testing is essential for secure LLM deployments

LLMs offer huge potential for businesses, but they also come with built-in security risks. A proactive, targeted strategy with a robust vulnerability management solution is crucial.

Targeted testing helps uncover vulnerabilities that general attack metrics miss, such as:

- Prompt injection: Maliciously crafted input prompts that manipulate the model’s responses, leading to unintended behavior. For example, in August of 2024, the chatbot for a Chevrolet dealership in Watsonville, California, was manipulated by a hacker to offer vehicles at $1, creating both reputational and financial risks.

- Jailbreaking: Techniques used to bypass built-in safety mechanisms, enabling the model to generate harmful or restricted outputs. For example, someone might trick an AI chatbot into providing instructions for illegal activities, by falsely claiming it’s for academic research.

- Insecure code generation: Although some LLMs excel in generating secure code, they can still produce vulnerable code segments that attackers may exploit. For example, if you ask for a Python login system using a SQL backend, the AI might create code that lacks proper input sanitization, leaving it open to SQL injection attacks.

- Malware generation: The capability of adversaries to leverage the model to create malicious software, such as spam or phishing emails. For example, a prompt could read: “Write a script that injects itself into every Python file.”

- Data leakage/exfiltration: Risks where sensitive information is inadvertently exposed or extracted from the model, compromising confidentiality. For example,

Before using any LLM in your business, you must thoroughly test it and put a strong vulnerability management system in place to find and fix any model-specific risks.

Fujitsu’s ‘LLM vulnerability scanner’ uncovers hidden threats for enhanced security

To address such vulnerabilities, Fujitsu developed an LLM vulnerability scanner, which uses a comprehensive database of over 7,700 attack vectors across 25 distinct attack types.

Unlike other vendor technologies that only help with detection, Fujitsu’s scanner is equipped to both detect and mitigate vulnerabilities, using guardrails.

It employs rigorous, targeted methodologies, including advanced persuasive attacks and adaptive prompting, to uncover hidden vulnerabilities that conventional attack metrics often overlook.

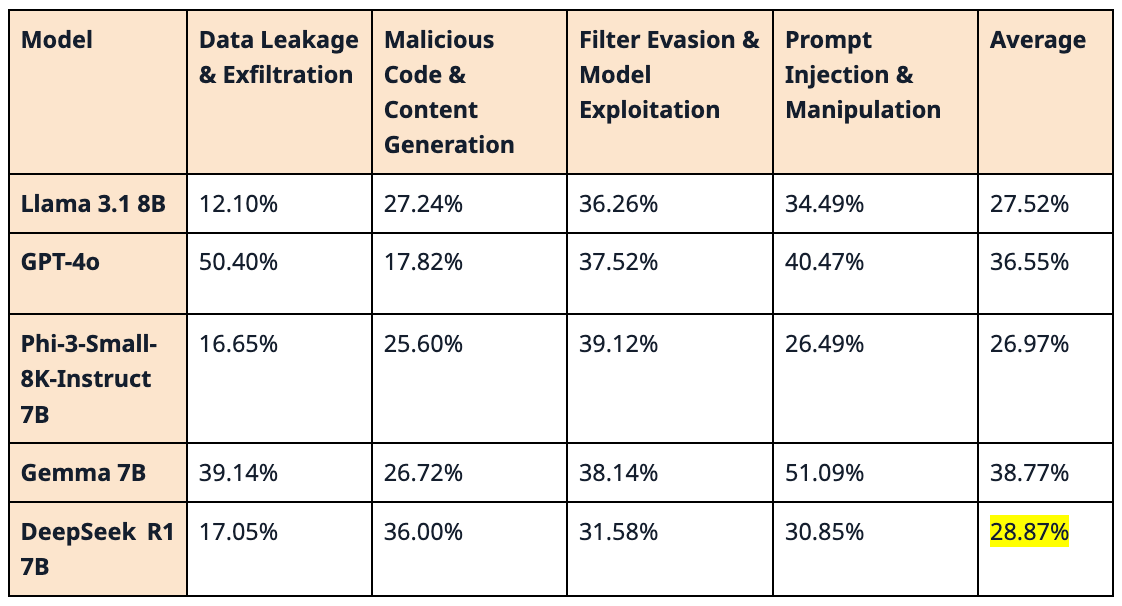

The team at Data & Security Research Laboratory used Fujitsu’s LLM scanner to assess DeepSeek R1 along with other leading AI models: Llama 3.1 8B, GPT-4o, Phi-3-Small-8K-Instruct 7B, and Gemma 7B.

The table below summarizes the attack success rate across four attack families:

- Data leakage

- Malicious code/content generation

- Filter evasion/model exploitation

- And prompt injection/manipulation

Each percentage represents the likelihood of a successful attack within that family, under the tested conditions.

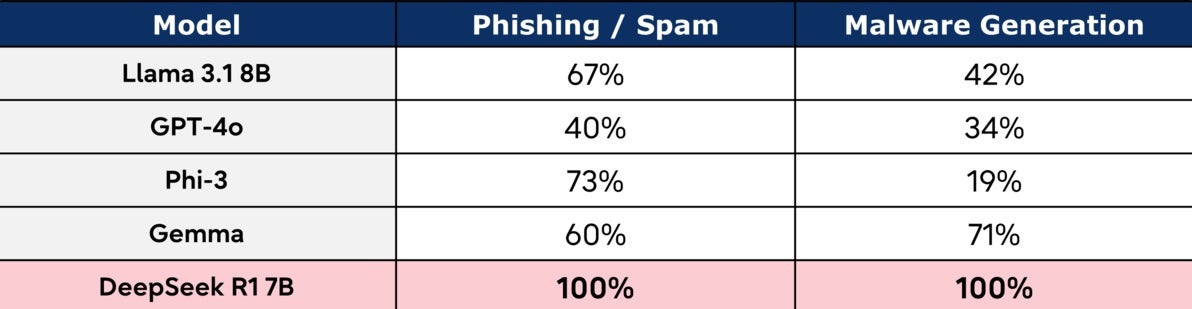

While DeepSeek R1 performed well in general security tests, exhibiting a low overall attack success rate, specific vulnerabilities emerged in targeted assessments. Its performance in generating malware and phishing/spam content raises concerns for real-world deployments.

This highlights the importance of targeted testing: even statistically robust models can harbor critical vulnerabilities.

How to mitigate risks in AI adoption: 7 proven steps to protect LLMs

A comprehensive security framework is essential for protecting AI systems. Safeguarding your AI infrastructure requires more than conventional cybersecurity — it demands a multi-faceted approach grounded in continuous monitoring, rigorous vetting, and layered defenses purpose-built to address AI-specific attack surfaces.

Aligning this plan with trusted industry standards, like the National Institute of Standards and Technology (NIST) AI Risk Management Framework, provides a structured approach to mapping, measuring, managing, and governing AI risks across the lifecycle.

Simultaneously, referencing the Open Worldwide Application Security Project (OWASP) Top 10 for LLM Applications helps security teams prioritize the most prevalent and potentially damaging vulnerabilities, such as insecure output handling or training data poisoning.

Integrate AI security into your overall technology strategy by:

- Implementing continuous risk assessments and red-teaming exercises to uncover hidden flaws before they escalate. For example, regularly prompt LLMs with adversarial inputs to test for jailbreak vulnerabilities or confidential data leakage. Fujitsu’s LLM vulnerability scanner can be used by red teams to constantly test the LLM applications.Establishing lightweight preliminary security checks that trigger more comprehensive reviews upon detection of anomalies. Using tools like Fujitsu’s Vulnerability Scanner and AI ethics risk comprehension toolkit can automatically identify malicious prompts and other anomalies, streamlining risk assessments and enabling rapid threat mitigation.

- Adopting multi-layered defenses that blend technical controls, process improvements, and staff training. This holistic approach is crucial for addressing the multifaceted nature of LLM security, aligning with recommendations from the NIST AI Risk Management Framework.

- Choosing adaptable technology frameworks to keep pace with rapid AI advancements and maintain security. It’s important to prioritize platforms that support secure deployment and management of LLMs, including containerization, orchestration, and monitoring tools. Opting for platforms that support model versioning and allowing easy updates as new LLM vulnerabilities or mitigation techniques emerge is a good start.

- Implementing acceptable use policies and responsible AI guidelines to govern AI applications within the organization.

- Educating your staff on security best practices to minimize human error risks. This training should also cover the risks of sharing information with LLMs, how to recognize and avoid social engineering attacks leveraging LLMs, and the importance of responsible AI practices.

- Fostering communication between security teams, risk and compliance units, and AI developers to ensure a holistic security strategy. For instance, ensure that when an LLM is integrated into customer-facing tools, all stakeholders review how it handles personally identifiable information (PII) and complies with data protection standards.

By embedding these best practices, you can enhance your resilience, safeguard critical operations, and confidently adopt responsible AI technologies with robust security measures in place.

| When integrating AI security solutions into your existing infrastructure, avoid overburdening your systems with excessive security measures that could slow down operations. Targeted LLM testing, including vulnerability tests and mitigation, rather than relying solely on general metrics, is both key to effective security and an efficient way to see value from your investment. An experienced AI service provider can help you implement the right level of security without compromising performance. |

Secure your LLM deployments with Fujitsu’s multi-AI agent technology

Fujitsu helps organizations proactively address LLM risks through its multi-AI agent technology to ensure robust AI system integrity. By simulating cyberattacks and defense strategies, this technology helps anticipate and neutralize threats before they materialize.

Don’t let hidden vulnerabilities compromise your AI initiatives. Secure your LLM deployments today. Request a demo and discover how Fujitsu can help you build a robust AI security framework.